- Ant Group uses Chinese chips and MoE models to cut AI training costs and reduce reliance on Nvidia.

- Releases open-source AI models, claiming strong benchmark results with domestic hardware.

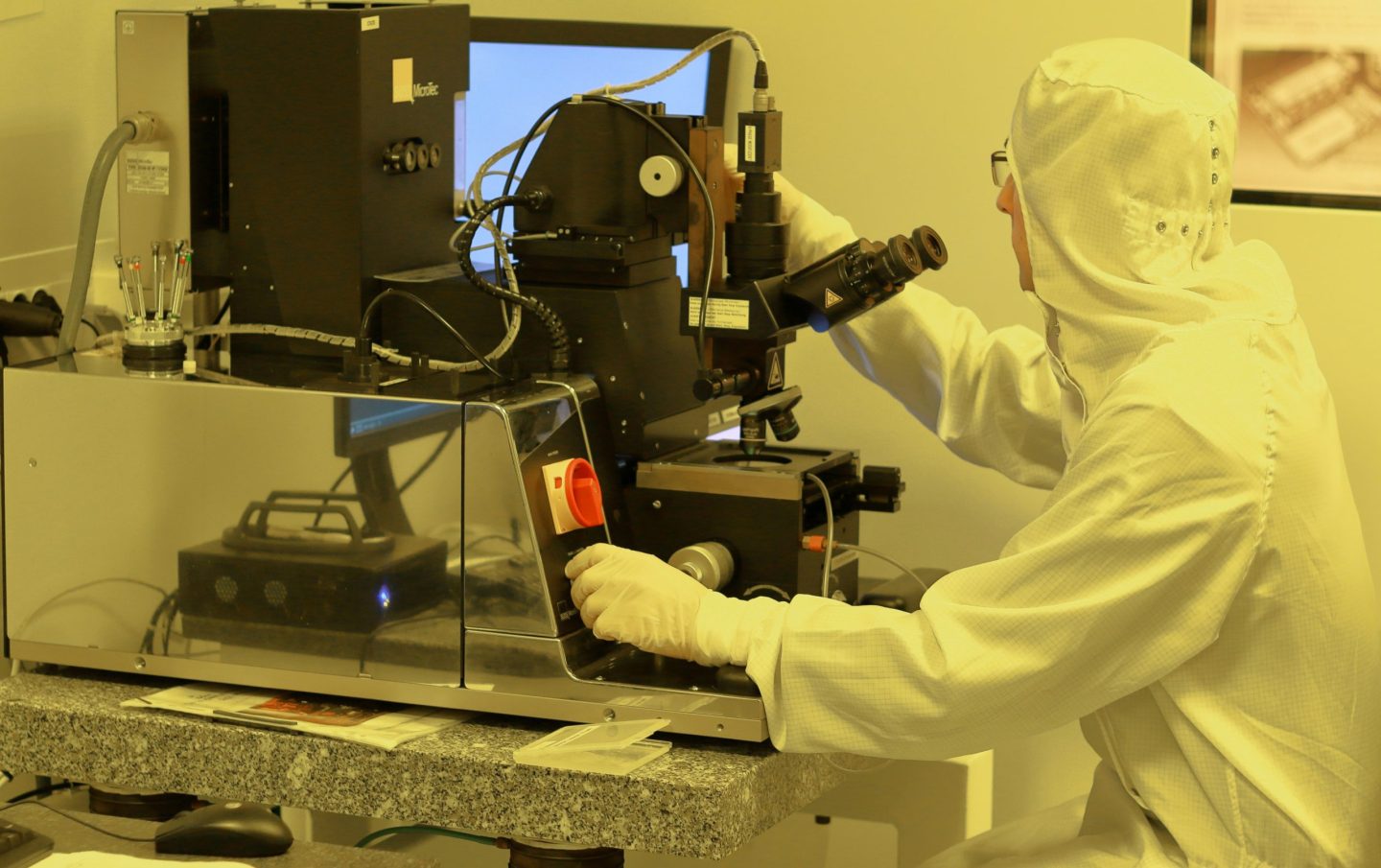

Ant Group, the Chinese affiliate of Alibaba, is exploring new ways to train large language models (LLMs) and reduce its dependence on advanced foreign semiconductors.

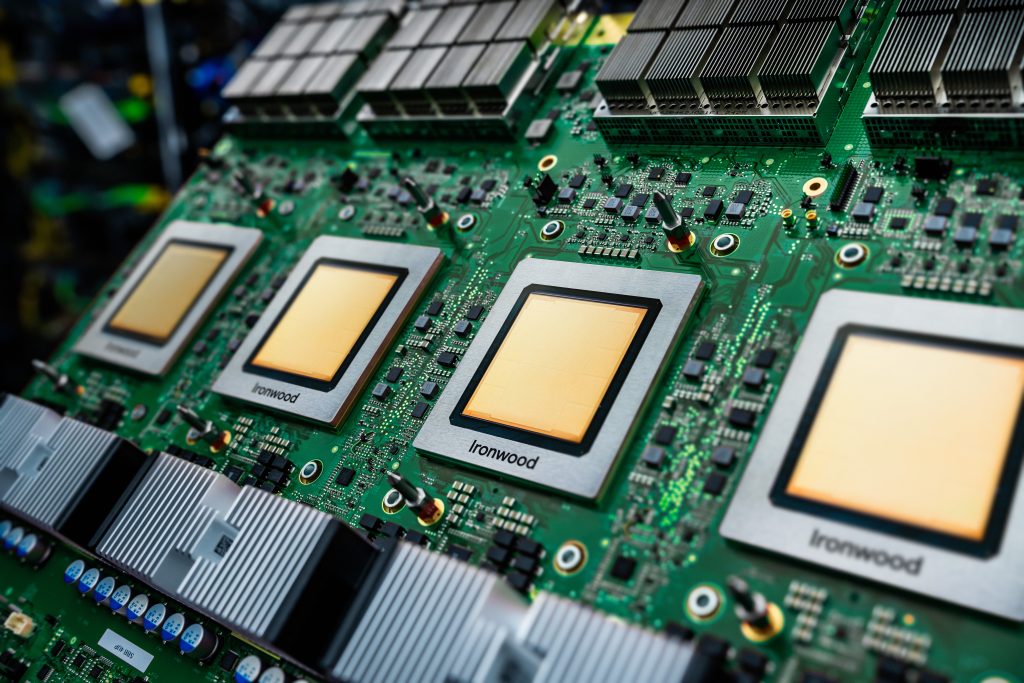

According to people familiar with the matter, the company has been using domestically made chips – including those supplied by Alibaba and Huawei – to develop cost-efficient AI models with a method known as Mixture of Experts (MoE).

The results have reportedly been on par with models trained using Nvidia’s H800 GPUs, which are among the more powerful chips currently restricted from export to China. While Ant continues to use Nvidia hardware for certain AI tasks, sources said the company is shifting toward other options, like processors from AMD and Chinese alternatives, for its latest development work.

The strategy reflects a broader trend among Chinese firms looking to adapt to ongoing export controls by optimising performance with locally available technology.

The MoE approach has grown in popularity in the industry, particularly for its ability to scale AI models more efficiently. Rather than running every input through all the parameters of a single large model, an MoE model routes each input to a small number of specialised “experts,” so only a fraction of the model is active at any one time. The division helps reduce the computing load and allows for better resource management.
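To make the routing idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a toy illustration of the general technique, not Ant's implementation: the names `moe_forward`, `make_expert`, and `gate_weights` are hypothetical, and each "expert" is just a function that scales its input.

```python
import math


def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical toy expert: multiplies every input value by `scale`."""
    return lambda x: [scale * v for v in x]


def moe_forward(x, gate_weights, experts, top_k=2):
    """Route input `x` to the `top_k` highest-scoring experts and mix their outputs.

    `gate_weights` holds one weight vector per expert (an assumption of this
    sketch); the gate score is the dot product of that vector with `x`.
    """
    scores = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    probs = softmax(scores)
    # Only the top_k experts are evaluated; the rest are skipped entirely,
    # which is where the compute saving comes from.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    output = [0.0] * len(x)
    for i in chosen:
        weight = probs[i] / norm  # renormalise over the selected experts
        expert_out = experts[i](x)
        output = [o + weight * v for o, v in zip(output, expert_out)]
    return output, chosen


# Four toy experts, but only two run for any given input.
experts = [make_expert(s) for s in (0.5, 1.0, 2.0, 4.0)]
gate_weights = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [3.0, 0.0]]
output, chosen = moe_forward([1.0, 0.0], gate_weights, experts, top_k=2)
print("experts used:", chosen)
print("mixed output:", output)
```

With four experts and `top_k=2`, half of the expert computation is skipped for each input. Production MoE models apply the same idea per token inside neural network layers, usually with extra machinery for balancing load across experts.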

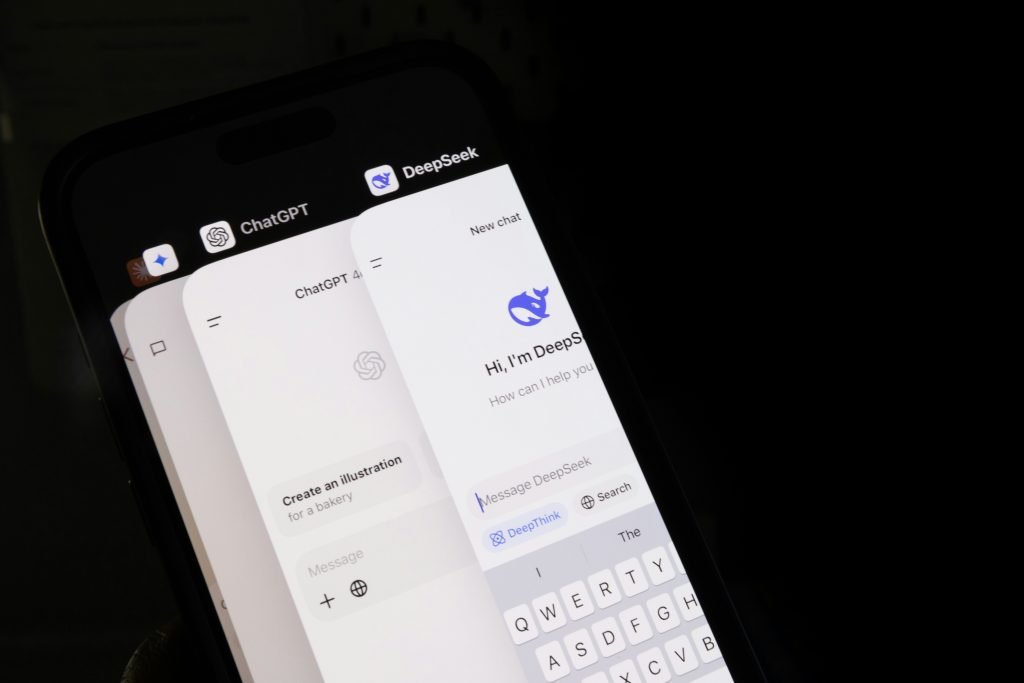

Google and China-based startup DeepSeek have also applied the method, seeing similar gains in training speed and cost-efficiency.

Ant’s latest research paper, published this month, outlines how the company has been working to lower training expenses by not relying on high-end GPUs. The paper claims the optimised method can reduce the cost of training on 1 trillion tokens from around 6.35 million yuan (approximately $880,000) using high-performance chips to 5.1 million yuan using less advanced, more readily available hardware. Tokens are the pieces of information that AI models process during training to learn patterns, so that they can generate text or complete tasks.
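The size of the claimed saving follows directly from the two figures above. The short calculation below works it out; the function name `training_cost_savings` is hypothetical, and the dollar conversion assumes the exchange rate implied by the article's own 6.35 million yuan ≈ $880,000 figure rather than an official rate.

```python
def training_cost_savings(baseline_yuan, optimised_yuan):
    """Return the absolute saving in yuan and the saving as a fraction of the baseline."""
    saved = baseline_yuan - optimised_yuan
    return saved, saved / baseline_yuan


BASELINE_YUAN = 6_350_000   # reported cost per 1 trillion tokens on high-performance chips
OPTIMISED_YUAN = 5_100_000  # reported cost per 1 trillion tokens on less advanced hardware
BASELINE_USD = 880_000      # approximate dollar value the article gives for the baseline

saved, fraction = training_cost_savings(BASELINE_YUAN, OPTIMISED_YUAN)

# Assumption: reuse the exchange rate implied by the article's baseline figures.
implied_yuan_per_usd = BASELINE_YUAN / BASELINE_USD
optimised_usd = OPTIMISED_YUAN / implied_yuan_per_usd

print(f"Saved {saved:,.0f} yuan per trillion tokens ({fraction:.1%})")
print(f"Optimised cost is roughly ${optimised_usd:,.0f} at the implied rate")
```

On these figures the saving is 1.25 million yuan per trillion tokens, a little under 20% of the baseline cost.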

According to the paper, Ant has developed two new models – Ling-Plus and Ling-Lite – which it now plans to offer in various industrial sectors, including finance and healthcare. The company recently acquired Haodf.com, an online medical services platform, as part of its broader push for AI-driven healthcare services. It also runs the AI life assistant app Zhixiaobao and a financial advisory platform known as Maxiaocai.

Ling-Plus and Ling-Lite have been open-sourced, with the former consisting of 290 billion parameters and the latter 16.8 billion. Parameters in AI are tunable elements that influence a model’s performance and output. While these numbers are smaller than the parameter count estimated for advanced models like OpenAI’s GPT-4.5 (around 1.8 trillion), Ant’s offerings are nonetheless regarded as sizeable by industry standards.

For comparison, DeepSeek-R1, a competing model also developed in China, contains 671 billion parameters.

In benchmark tests, Ant’s models were said to perform competitively. Ling-Lite outpaced a version of Meta’s Llama model in English-language understanding, while both Ling models outperformed DeepSeek’s offerings on Chinese-language evaluations. The claims, however, have not been independently verified.

The paper also highlighted some technical challenges the organisation faced during model training. Even minor adjustments to the hardware or model architecture resulted in instability, including sharp increases in error rates. These issues illustrate the difficulty of maintaining model performance while shifting away from high-end GPUs that have become the standard in large-scale AI development.

Ant’s research points to a growing effort among Chinese companies to achieve greater technological self-reliance. With US export restrictions limiting access to Nvidia’s most advanced chips, companies like Ant are seeking ways to build competitive AI tools using alternative resources.

Although Nvidia’s H800 chip is not the most powerful in its lineup, it is still a relatively capable processor. Ant’s ability to train models of comparable quality without such hardware signals a potential path forward for companies affected by trade controls.

At the same time, the broader industry dynamics continue to evolve. Nvidia CEO Jensen Huang has said that increasing computational needs will drive demand for more powerful chips, even as efficiency-focused models gain traction. His view suggests that advanced GPU development will continue to be prioritised, despite alternative strategies like those explored by Ant.

Ant’s effort to reduce costs and rely on domestic chips could influence how other firms approach AI training – especially in markets facing similar constraints. As China accelerates its push toward AI independence, developments like these are likely to draw attention across both the tech and financial landscapes.