- DeepSeek’s AI feedback systems help make AI understand what humans want.

- Method could allow smaller AI models to match the performance of larger ones.

- Potential to reduce cost of training.

Chinese AI company DeepSeek has developed a new approach to AI feedback systems that could transform how artificial intelligence learns from human preferences.

Developed with researchers at Tsinghua University, DeepSeek’s approach tackles one of the most persistent challenges in AI development: teaching machines to understand what humans genuinely want from them. The work is detailed in the research paper “Inference-Time Scaling for Generalist Reward Modeling,” which introduces a technique for making AI responses both more accurate and more efficient – a win-win in an AI world where better performance typically demands more computing power.

Teaching AI to understand human preferences

At the heart of DeepSeek’s innovation is a new approach to what experts call “reward models”, the feedback mechanisms that guide how AI systems learn. Think of reward models as digital teachers: when an AI produces a response, the reward model judges how good that response was, helping the AI improve over time. The challenge has long been building reward models that accurately reflect human preferences across many different types of questions. DeepSeek’s solution combines two techniques:

- Generative Reward Modeling (GRM): Expresses rewards in language, with the model writing out its judgement of a response, which provides richer feedback than earlier methods that relied on a single numerical score.

- Self-Principled Critique Tuning (SPCT): Uses online reinforcement learning to train the reward model to adaptively generate its own guiding principles and critiques.

Zijun Liu, a researcher from Tsinghua University and DeepSeek-AI who co-authored the paper, explains that this combination allows “principles to be generated based on the input query and responses, adaptively aligning reward generation process.”
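To make the idea more concrete, here is a minimal Python sketch of a generative reward model that scores each response individually. It is illustrative only, not DeepSeek’s implementation. It assumes that the reward model is simply a function turning a prompt into text, that it is asked to write principles and critiques, and that a score for each response is then parsed out of that text. The prompt wording, the “Score for Response i:” output format, and the names `generative_reward`, `build_prompt` and `fake_generate` are all hypothetical. `fake_generate` is a stand-in for a real language model so that the example runs on its own.

```python
import re
from typing import Callable, List

# Hypothetical stand-in type for a language-model call: prompt in, text out.
Generator = Callable[[str], str]

# Hypothetical output convention: one "Score for Response i: N" line per response.
SCORE_PATTERN = re.compile(r"Score for Response (\d+):\s*(\d+)")


def build_prompt(query: str, responses: List[str]) -> str:
    """Ask the reward model to write principles first, then critique each response."""
    parts = [f"Query: {query}"]
    for i, response in enumerate(responses, start=1):
        parts.append(f"Response {i}: {response}")
    parts.append(
        "First write principles for judging this query, then critique each "
        "response and end with 'Score for Response i: <1-10>' lines."
    )
    return "\n".join(parts)


def generative_reward(query: str, responses: List[str], generate: Generator) -> List[int]:
    """Return one integer score per response, parsed from the generated critique text."""
    text = generate(build_prompt(query, responses))
    scores = [0] * len(responses)  # a response with no score line keeps 0
    for idx, value in SCORE_PATTERN.findall(text):
        i = int(idx) - 1
        if 0 <= i < len(responses):
            scores[i] = int(value)
    return scores


def fake_generate(prompt: str) -> str:
    """Toy generator with fixed principles and critiques (hypothetical, for illustration)."""
    return (
        "Principles: correctness (weight 0.6), clarity (weight 0.4).\n"
        "Critique 1: correct and clear.\nScore for Response 1: 8\n"
        "Critique 2: contains an arithmetic error.\nScore for Response 2: 3\n"
    )


if __name__ == "__main__":
    print(generative_reward("What is 2 + 2?", ["4", "5"], fake_generate))  # [8, 3]
```

In a real system the text would come from a trained model, and SPCT is the step that would teach that model to write useful principles and critiques. The sketch only shows how a written judgement can still yield a number that a training loop can use.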

Doing more with less

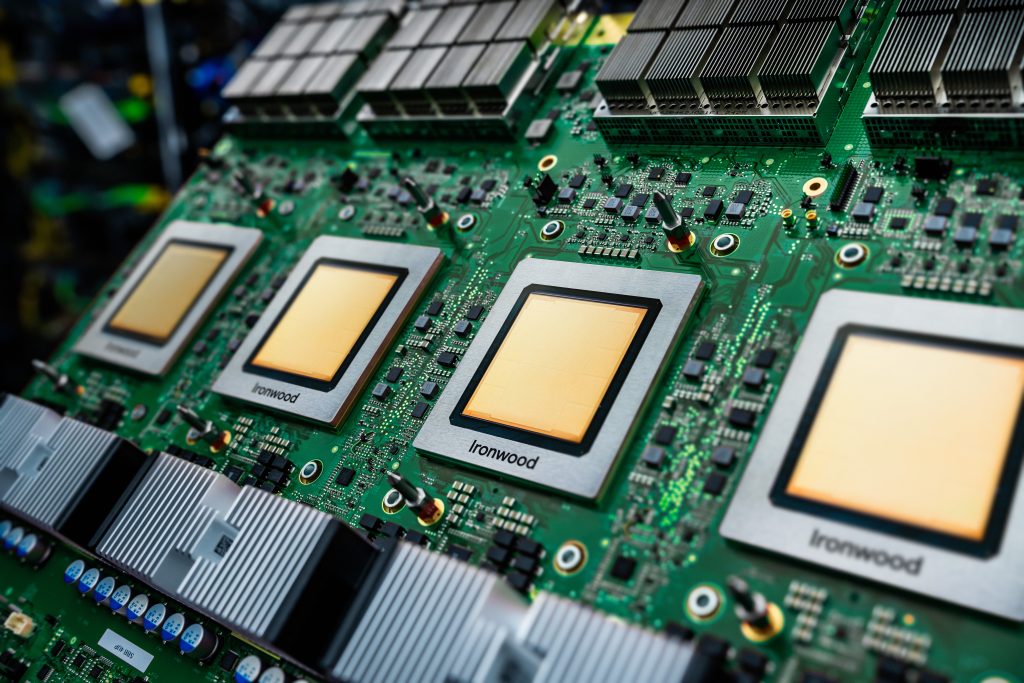

What makes DeepSeek’s approach particularly valuable is “inference-time scaling.” Rather than requiring more computing power during the training phase, the method improves performance while the AI is actually being used, at the ‘point of inference’.

The researchers demonstrated that their method achieves better results as more samples are drawn during inference, potentially allowing smaller models to match the performance of much larger ones. The efficiency breakthrough comes at an important moment in AI development, when the relentless push for larger models raises concerns about sustainability, supply chain viability, and accessibility.
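The effect of increased sampling can be sketched in the same spirit. This sketch assumes that each call to the reward model produces a fresh set of principles and critiques. Under that assumption, one simple way to spend extra inference compute is to draw k samples and add up each response’s scores, a basic form of voting. The noisy toy judge, its score ranges, and the names `scaled_reward`, `parse_scores` and `make_noisy_judge` are hypothetical. They were chosen only to show why more samples can make a ranking more reliable, and the sketch is not presented as the paper’s exact aggregation procedure.

```python
import random
import re
from typing import Callable, List

# Hypothetical output convention: one "Score for Response i: N" line per response.
SCORE_PATTERN = re.compile(r"Score for Response (\d+):\s*(\d+)")


def parse_scores(text: str, n: int) -> List[int]:
    """Extract one score per response from a critique; missing scores default to 0."""
    scores = [0] * n
    for idx, value in SCORE_PATTERN.findall(text):
        i = int(idx) - 1
        if 0 <= i < n:
            scores[i] = int(value)
    return scores


def scaled_reward(sample: Callable[[], str], n_responses: int, k: int) -> List[int]:
    """Draw k independent critiques and sum each response's scores (simple voting)."""
    totals = [0] * n_responses
    for _ in range(k):
        for i, score in enumerate(parse_scores(sample(), n_responses)):
            totals[i] += score
    return totals


def make_noisy_judge(seed: int) -> Callable[[], str]:
    """Hypothetical toy judge: response 1 is better on average, but each critique is noisy."""
    rng = random.Random(seed)

    def sample() -> str:
        better = rng.randint(4, 9)  # noisy score for the stronger response
        worse = rng.randint(2, 7)   # noisy score for the weaker response
        return f"Score for Response 1: {better}\nScore for Response 2: {worse}"

    return sample


if __name__ == "__main__":
    judge = make_noisy_judge(seed=0)
    for k in (1, 8, 32):
        print(k, scaled_reward(judge, n_responses=2, k=k))
```

With k = 1 this judge can occasionally rank the weaker response higher (for example 4 against 7). As k grows, the summed scores tend to separate in favour of the better response. That is the intuition behind spending more compute at inference time rather than during training.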

What this means for the future of AI

DeepSeek’s innovation in AI feedback systems could have far-reaching implications:

- More accurate AI responses: Better reward models mean AI systems receive more precise feedback, improving outputs over time.

- Adaptable performance: The ability to scale performance during inference allows AI systems to adjust to different computational constraints.

- Broader capabilities: AI systems can perform better across many tasks by improving reward modelling for general domains.

- Democratising AI development: If smaller models can achieve similar results to larger models via better inference methods, AI research could become more accessible to those with limited resources.

DeepSeek’s rising influence

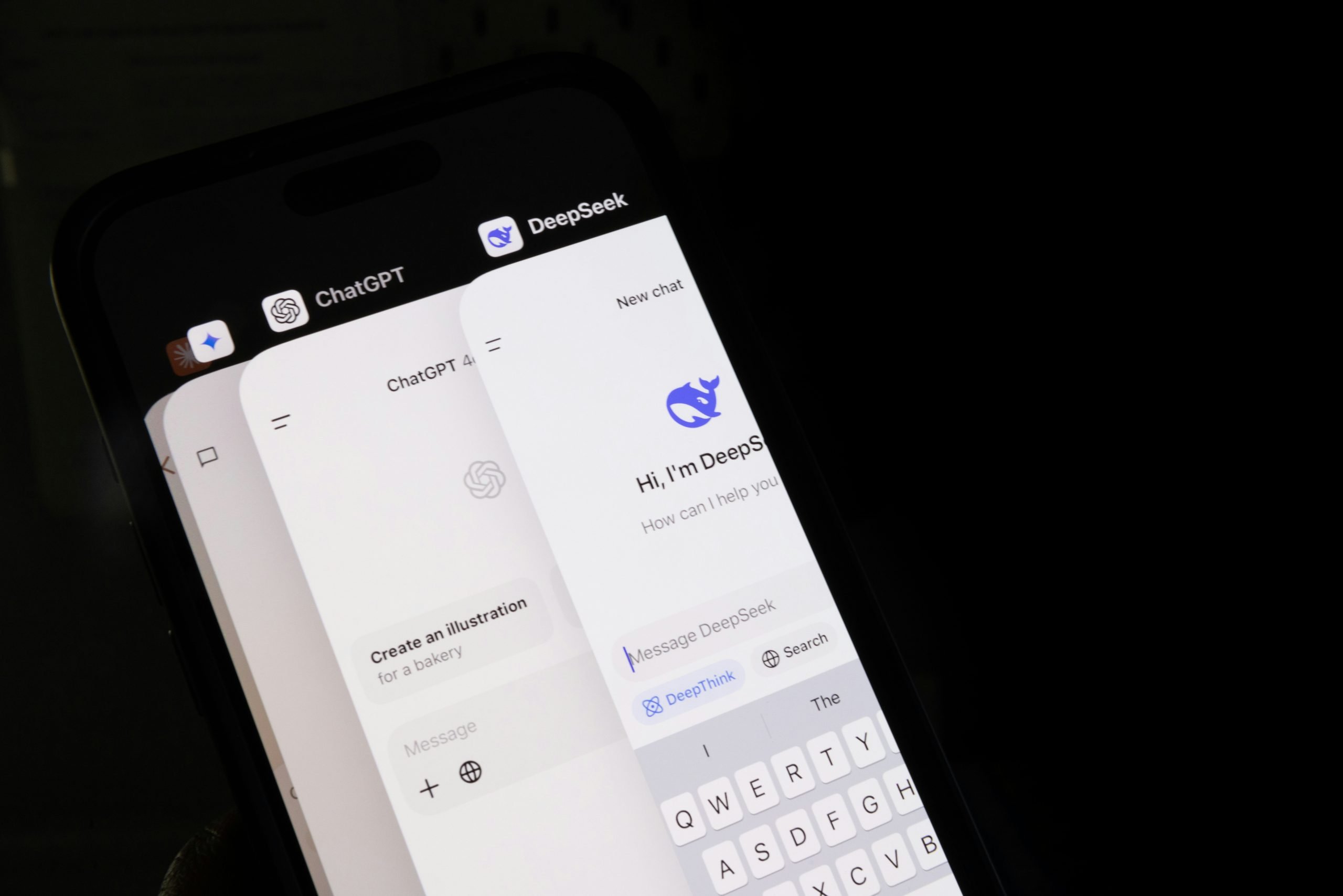

The latest advance adds to DeepSeek’s growing reputation in the AI field. Although founded only in 2023 by entrepreneur Liang Wenfeng, the Hangzhou-based company has made an impact with the V3 foundation model and R1 reasoning model. The company recently upgraded its V3 model (DeepSeek-V3-0324), which it said offered “enhanced reasoning capabilities, optimised front-end web development and upgraded Chinese writing proficiency.”

DeepSeek has also committed to open-sourcing its AI technology. In February it opened five public code repositories that allow developers to review and contribute to its software.

According to the research paper, DeepSeek intends to make its GRM models open-source, although no specific timeline has been provided. That decision could accelerate progress in the field by allowing broader experimentation with this type of advanced AI feedback system.

Beyond bigger is better

As AI continues to evolve rapidly, DeepSeek’s work demonstrates that innovations in how models learn can be just as important as increasing their size. By focusing on the quality and scalability of feedback, DeepSeek addresses one of the central challenges in creating AI that better understands and aligns with human preferences.

This possible breakthrough in AI feedback systems suggests the future of artificial intelligence may depend not just on raw computing power but on more intelligent and efficient methods that better capture the nuances of human preferences.