- Alibaba Cloud integrates DeepSeek AI models into its cloud.

- Move aligns with broader industry trend.

Alibaba Cloud is the latest of the world’s tech giants to jump onto the DeepSeek bandwagon, offering the Chinese AI startup’s models to its customers. The move isn’t surprising – Microsoft, Amazon, Huawei, and others have already started offering DeepSeek’s open-source AI models to their users, signalling a growing industry trend.

In a WeChat post, Alibaba Cloud pointed out how “effortless” it is for users to train, deploy, and run AI models – with no coding required. The company claims the approach streamlines AI development, making it faster and more accessible for businesses and developers.

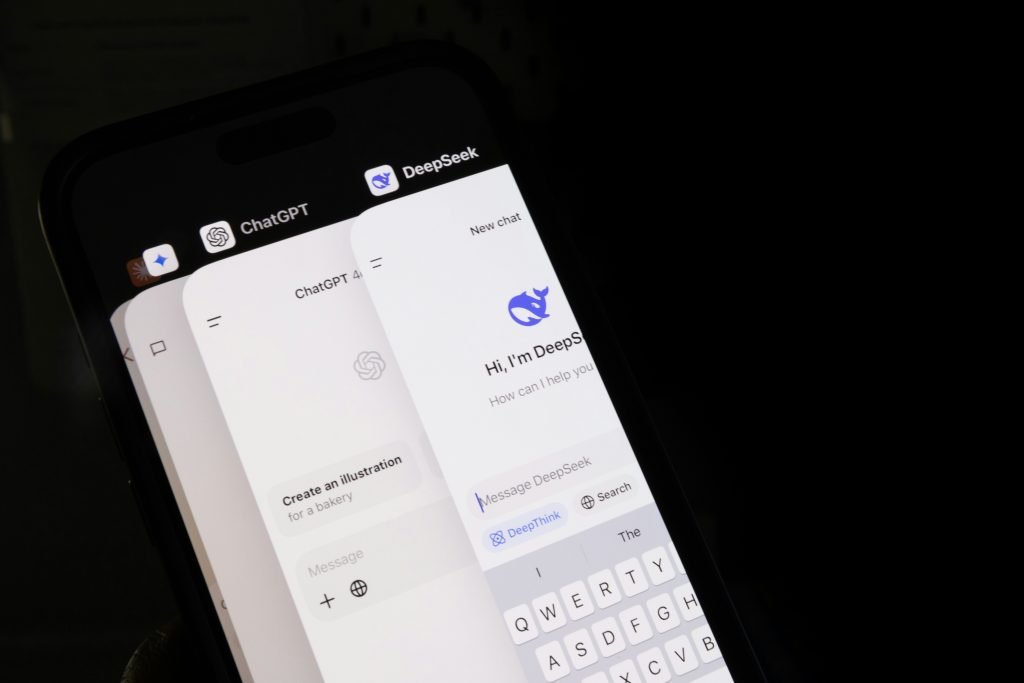

Alibaba Cloud users can now explore DeepSeek’s AI models in the PAI Model Gallery, a collection of open-source LLMs. The models can be used for everything from text generation to complex reasoning tasks. DeepSeek’s flagship models, DeepSeek-V3 and DeepSeek-R1, are particularly noteworthy, being designed to deliver high performance at a fraction of the cost and computing power typically required by industry heavyweights.

The Gallery also offers distilled versions of the larger models, like DeepSeek-R1-Distill-Qwen-7B, which provide similar capabilities while being more resource-efficient.

For those less familiar, LLMs power generative AI tools like the well-known ChatGPT from OpenAI. Open-source models give developers greater flexibility to tweak and refine AI capabilities, while model distillation – training smaller models to mimic the performance of larger ones – helps cut running (and training) costs without necessarily sacrificing too much performance.

Alibaba Cloud’s decision to incorporate DeepSeek’s models comes shortly after the company introduced its own Qwen 2.5-Max model, a direct competitor to DeepSeek-V3. Huawei’s decision to offer DeepSeek is part of the same broader push by major cloud providers.

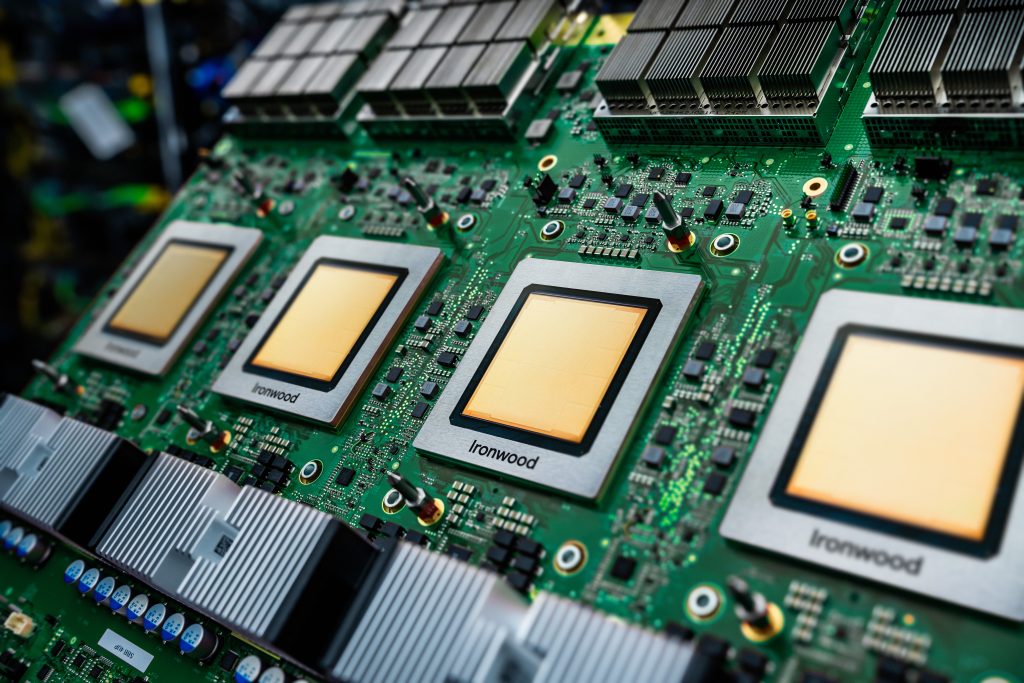

Huawei staff worked through the Lunar New Year holidays with AI infrastructure startup SiliconFlow to integrate DeepSeek’s V3 and R1 models into its Ascend cloud service. Huawei claims that the DeepSeek models running on its service perform as well as those running on premium global GPUs.

As reported by South China Morning Post, SiliconFlow, which hosts the DeepSeek models, is offering discounted access to V3 at 1 yuan (US$0.13) per 1 million input tokens and 2 yuan for 1 million output tokens, while R1 access is priced at 4 yuan and 16 yuan, respectively.
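
To put those per-token prices in perspective, the short Python sketch below estimates what a workload might cost on each model. The prices are the discounted figures quoted above; the helper name `estimate_cost_yuan` and the example workload of 5 million input tokens and 1 million output tokens are purely illustrative assumptions, not anything published by SiliconFlow or Huawei.

```python
# Discounted prices in yuan per 1 million tokens, as quoted in the report above.
PRICES_YUAN_PER_MILLION = {
    "DeepSeek-V3": {"input": 1.0, "output": 2.0},
    "DeepSeek-R1": {"input": 4.0, "output": 16.0},
}


def estimate_cost_yuan(model, input_tokens, output_tokens):
    """Estimate the cost in yuan of one workload (hypothetical helper)."""
    price = PRICES_YUAN_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Illustrative workload (an assumption): 5M input tokens, 1M output tokens.
v3_cost = estimate_cost_yuan("DeepSeek-V3", 5_000_000, 1_000_000)
r1_cost = estimate_cost_yuan("DeepSeek-R1", 5_000_000, 1_000_000)
print(f"V3: {v3_cost} yuan, R1: {r1_cost} yuan")
```

For this assumed workload, V3 comes to 7 yuan and R1 to 36 yuan, which shows how much of the R1 bill is driven by its higher output-token price.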

Huawei’s Ascend cloud service uses its proprietary hardware, including self-developed server clusters, AI modules, and accelerator cards, indicative of China’s push to reduce reliance on foreign technology in the face of US trade restrictions.

Tencent is also on board, offering DeepSeek’s R1 model on its cloud computing platform, where users can get up and running with just a three-minute setup, the company claims. Meanwhile, Nvidia has added DeepSeek-R1 to its NIM microservice, emphasising its advanced reasoning capabilities and efficiency across tasks like logical inference, maths, coding, and language understanding.

Other tech giants are making similar moves, including AWS, the world’s largest provider of remote compute. Microsoft, a key investor in OpenAI, recently introduced R1 support on its Azure cloud and GitHub platforms, allowing developers to build AI applications that run locally on Copilot+ PCs.

But amid all the hype, not everyone is convinced that DeepSeek is revolutionising AI. Some experts argue that the startup’s claimed cost savings may be overstated. Fudan University computer science professor Zheng Xiaoqing pointed out that DeepSeek’s reported low training costs don’t account for earlier research and development expenses.

In an interview with National Business Daily, he said that DeepSeek’s advantage is based on engineering optimisations rather than groundbreaking AI innovation, meaning that the company’s engineering methods may not shake up the AI chip industry as much as some predict.

For now, though, DeepSeek’s models are quickly gaining traction, with major cloud providers racing to integrate them. The long-term impact of this trend on the AI industry remains to be seen, but one thing is certain: the competition for AI dominance is heating up.
