- AI is disrupting the 2024 US election and elections worldwide.

- Regulation is slow, leaving elections vulnerable to manipulation.

For several years now, AI has disrupted the public’s ability to trust what it sees, hears, and reads. A noteworthy example is the Republican National Committee’s recent release of an AI-generated ad depicting an imagined nightmarish future in which President Joe Biden is re-elected. The advertisement included computer-generated visuals of devastated communities and border chaos.

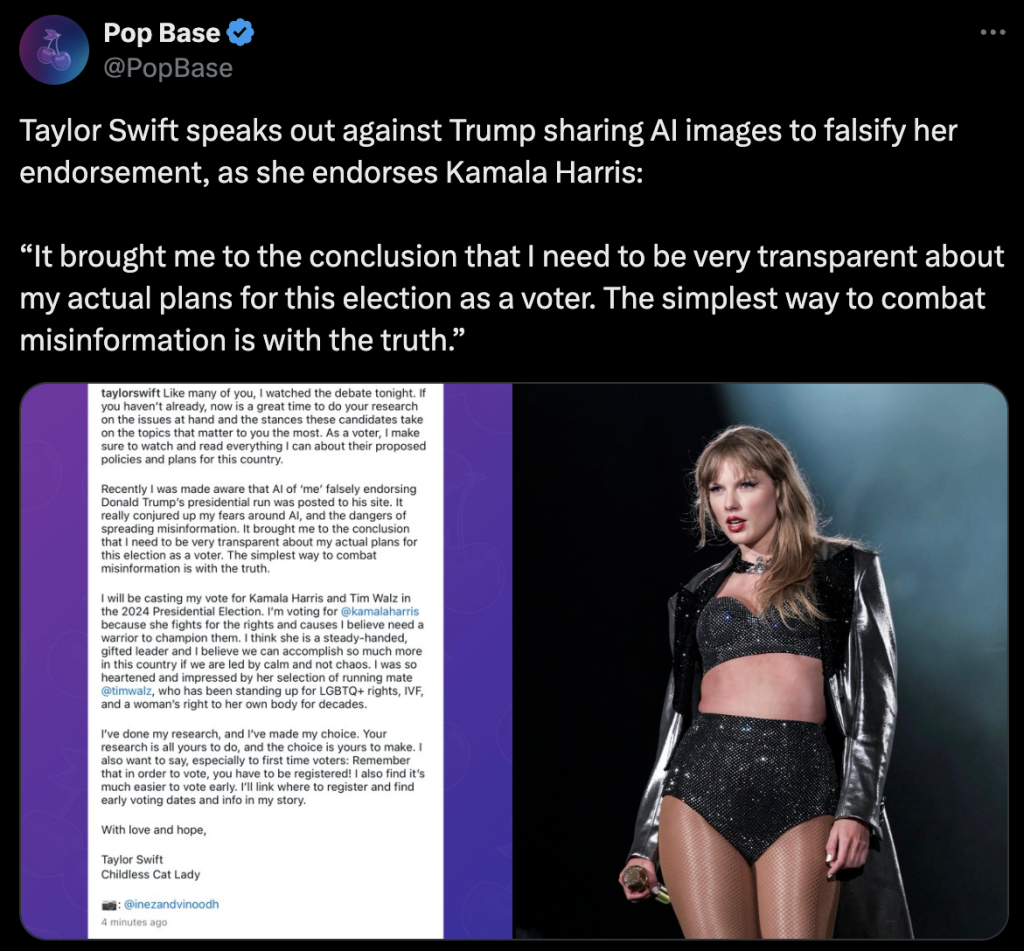

In another instance, robocalls falsely claiming to be from Biden discouraged voters in New Hampshire from participating in the 2024 primary. Over the summer, the US Department of Justice shut down a Russian bot operation that used AI to impersonate Americans on social media. OpenAI also took action against an Iranian group using ChatGPT to create misleading social media content.

It remains unclear how much direct harm AI can cause at present. However, many have expressed grave concerns that the technology makes it easy to create convincing but misleading content. There have been numerous attempts to regulate AI, but little progress has been made on the biggest problems, such as the technology's possible influence on the upcoming 2024 US election.

A slow-moving effort toward regulation

In response to the challenges posed by AI, the Biden administration introduced a blueprint for an AI Bill of Rights two years ago, targeting issues such as algorithmic discrimination and abusive data practices. An executive order on AI followed in 2023. US Senate Majority Leader Chuck Schumer also organised an AI summit that included high-profile figures such as Bill Gates, Mark Zuckerberg, and Elon Musk. At the international level, the United Kingdom hosted an AI Safety Summit that produced the "Bletchley Declaration", which encourages global collaboration on AI regulation. These efforts indicate that the risks of AI-driven election interference have not gone unnoticed.

Despite these efforts, little has been done to explicitly address the use of artificial intelligence in US political campaigns. The two federal agencies with the authority to act, the Federal Communications Commission (FCC) and the Federal Election Commission (FEC), have taken very limited action thus far. For example, the FCC proposed requiring political advertisements on television and radio to disclose the use of AI. However, these regulations are unlikely to go into effect before the 2024 election, and the proposals have already stirred partisan disagreement.

Challenges in regulating AI in politics

The Federal Election Commission recently concluded that it cannot write new rules specifically governing AI-generated content in political advertisements; instead, it will enforce existing rules against all forms of fraudulent misrepresentation, whether or not AI is used. Advocacy groups such as Public Citizen argue that this "wait-and-see" approach is insufficient, because the disruption AI could introduce into the election may be too pervasive to counter.

One reason for the absence of decisive action may be the complex legal framework governing political speech. The First Amendment protects free speech in the United States and generally shields even misleading statements in political ads. Even so, a majority of Americans in recent polls have said they want tougher regulation of AI-generated content in elections, and many support removing candidates who use AI to deceive voters.

The sheer range of ways AI can be used creates a major challenge for regulators. The technology is not limited to producing fake news or political advertisements; it has many applications, each warranting a different level of scrutiny. Airbrushing a candidate's photos may seem harmless, but creating deepfakes to damage an opponent's reputation clearly goes too far. AI is already being used to personalise campaign messaging, so where should the limits be set? And how should AI-generated memes circulating on social media be handled?

Despite the slow pace of federal legislative action, some US states have taken steps to regulate AI in elections. California, for example, was one of the first states to pass laws prohibiting the use of manipulated media in political campaigns, and more than 20 other states have since adopted similar regulations.

The global context: AI’s impact on elections

Concerns about AI-generated disinformation in elections have surfaced globally. In last year's Slovakian election, for example, deepfakes were used to defame a political leader, possibly tipping the result in favour of his pro-Russia opponent. In January, the Chinese government was accused of interfering in the Taiwanese election using AI-generated deepfakes. A deluge of harmful AI-generated content emerged in the UK ahead of the July 4 election, including a deepfake of BBC presenter Sarah Campbell claiming that Prime Minister Rishi Sunak had endorsed a fraudulent investment platform. Meanwhile, during India's general election, deepfakes of deceased leaders were used to sway voters.

In some cases, AI plays a more ambiguous role in campaigns. In Indonesia, a former general running for president used an AI-generated cartoon of himself to connect with younger voters, a move that raised questions given his involvement in the country's former military regime, although there was no blatant deception in that instance. Despite being imprisoned, Pakistani opposition leader Imran Khan addressed his supporters via an AI-generated video, circumventing efforts to silence him. In Belarus, the opposition used an AI-generated "candidate", a chatbot posing as a 35-year-old Minsk resident, to engage voters at a time when the country's opposition exists mainly in exile.

International efforts such as the Bletchley Declaration are a good start, but much more work remains to be done. In the US, legislative proposals such as the AI Transparency in Elections Act, the Honest Ads Act, and the Protect Elections From Deceptive AI Act show promise, but their prospects are uncertain given resistance from civil liberties organisations and the tech industry.

This regulatory uncertainty presents an opportunity for tech companies. In the absence of clear rules, platforms can continue to offer AI tools, ad space, and data to political campaigns subject to only minimal oversight. While some tech companies have made voluntary pledges to limit AI’s role in elections, safeguards are frequently easy to bypass and unlikely to provide a long-term solution.

What’s next? The need for comprehensive reform

As Greg Schneier and others have noted, the fragmented regulatory environment makes it difficult to address AI's impact on elections effectively. US agencies often find themselves in jurisdictional disputes over which body should take the lead. Meanwhile, the public is left to navigate an increasingly complex landscape in which artificial intelligence is used both to inform and to mislead. Keeping pace with rapid advances in AI will require stronger governance, greater transparency, and meaningful reform.

The upcoming US election presents a pivotal moment for addressing the use of AI in political campaigns. With concerns about election disinformation growing globally, it is critical for governments and regulatory bodies to act decisively and, above all, quickly to ensure fair and transparent elections, both now and in the future.